Image Captioning

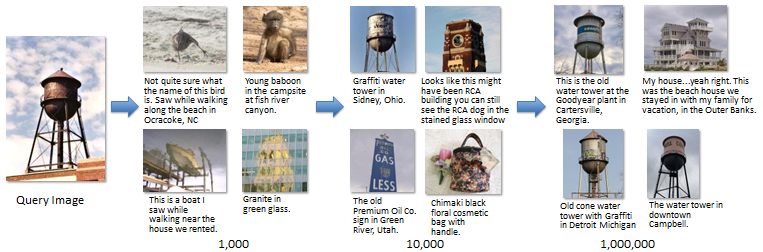

Im2Text: Describing Images Using 1 Million Captioned Photographs

- paper: http://tamaraberg.com/papers/generation_nips2011.pdf

- project: http://vision.cs.stonybrook.edu/~vicente/sbucaptions/

Show and Tell: A Neural Image Caption Generator(Google)

- arXiv: http://arxiv.org/abs/1411.4555

- github: https://github.com/karpathy/neuraltalk

- GitXiv: http://gitxiv.com/posts/7nofxjoYBXga5XjtL/show-and-tell-a-neural-image-caption-nic-generator

- github: https://github.com/apple2373/chainer_caption_generation

- blog(“Image caption generation by CNN and LSTM”): http://t-satoshi.blogspot.com/2015/12/image-caption-generation-by-cnn-and-lstm.html

Deep Visual-Semantic Alignments for Generating Image Descriptions

- intro: “propose a multimodal deep network that aligns various interesting regions of the image, represented using a CNN feature, with associated words. The learned correspondences are then used to train a bi-directional RNN. This model is able, not only to generate descriptions for images, but also to localize different segments of the sentence to their corresponding image regions.”

- arxiv: http://arxiv.org/abs/1412.2306

- slides: http://www.cs.toronto.edu/~vendrov/DeepVisualSemanticAlignments_Class_Presentation.pdf

Deep Captioning with Multimodal Recurrent Neural Networks (m-RNN)

- intro: “combines the functionalities of the CNN and RNN by introducing a new multimodal layer, after the embedding and recurrent layers of the RNN.”

- arxiv: http://arxiv.org/abs/1412.6632

- homepage: http://www.stat.ucla.edu/~junhua.mao/m-RNN.html

- github: https://github.com/mjhucla/mRNN-CR

Show, Attend and Tell: Neural Image Caption Generation with Visual Attention

Describing Videos by Exploiting Temporal Structure

Learning like a Child: Fast Novel Visual Concept Learning from Sentence Descriptions of Images

- arxiv: http://arxiv.org/abs/1504.06692

- homepage: http://www.stat.ucla.edu/~junhua.mao/projects/child_learning.html

- github: https://github.com/mjhucla/NVC-Dataset

Learning FRAME Models Using CNN Filters for Knowledge Visualization(CVPR 2015)

- arXiv: http://arxiv.org/abs/1509.08379

- project page: http://www.stat.ucla.edu/~yang.lu/project/deepFrame/main.html

- code+data: http://www.stat.ucla.edu/~yang.lu/project/deepFrame/doc/deepFRAME_1.1.zip

Generating Images from Captions with Attention

- arXiv: http://arxiv.org/abs/1511.02793

- github: https://github.com/emansim/text2image

- demo: http://www.cs.toronto.edu/~emansim/cap2im.html

Order-Embeddings of Images and Language

DenseCap: Fully Convolutional Localization Networks for Dense Captioning

Expressing an Image Stream with a Sequence of Natural Sentences (CRCN. NIPS 2015)

- nips-page: http://papers.nips.cc/paper/5776-expressing-an-image-stream-with-a-sequence-of-natural-sentences

- paper: http://papers.nips.cc/paper/5776-expressing-an-image-stream-with-a-sequence-of-natural-sentences.pdf

- paper: http://www.cs.cmu.edu/~gunhee/publish/nips15_stream2text.pdf

- author-page: http://www.cs.cmu.edu/~gunhee/

- github: https://github.com/cesc-park/CRCN

Image Captioning with Deep Bidirectional LSTMs

Video Captioning

Translating Videos to Natural Language Using Deep Recurrent Neural Networks

- arxiv: http://arxiv.org/abs/1412.4729

- project page: https://www.cs.utexas.edu/~vsub/naacl15_project.html

Sequence to Sequence – Video to Text(S2VT. ICCV 2015)

- arXiv: http://arxiv.org/abs/1505.00487

- project: http://vsubhashini.github.io/s2vt.html

- github: https://github.com/vsubhashini/caffe/tree/recurrent/examples/s2vt

- github: https://github.com/jazzsaxmafia/video_to_sequence

Jointly Modeling Embedding and Translation to Bridge Video and Language

Tools

CaptionBot (Microsoft)

- website: https://www.captionbot.ai/